By adding an LLM-generated vector representation (an embedding) of your data to your database, you will be able to implement Retrieval Augmented Generation (RAG). With this technique, you look up the data most relevant to a query or question and provide it to the LLM as context when you submit that query.

For instance, you can create embeddings based on all your product titles, descriptions, and specifications. Almost like magic, the LLM will then be able to answer detailed questions about your entire product catalogue. Combine this with a flow that retrieves your order history and submits it to the LLM as well, and you will be able to ask a simple question such as “Are the shoes I bought waterproof?” and get a precise answer.

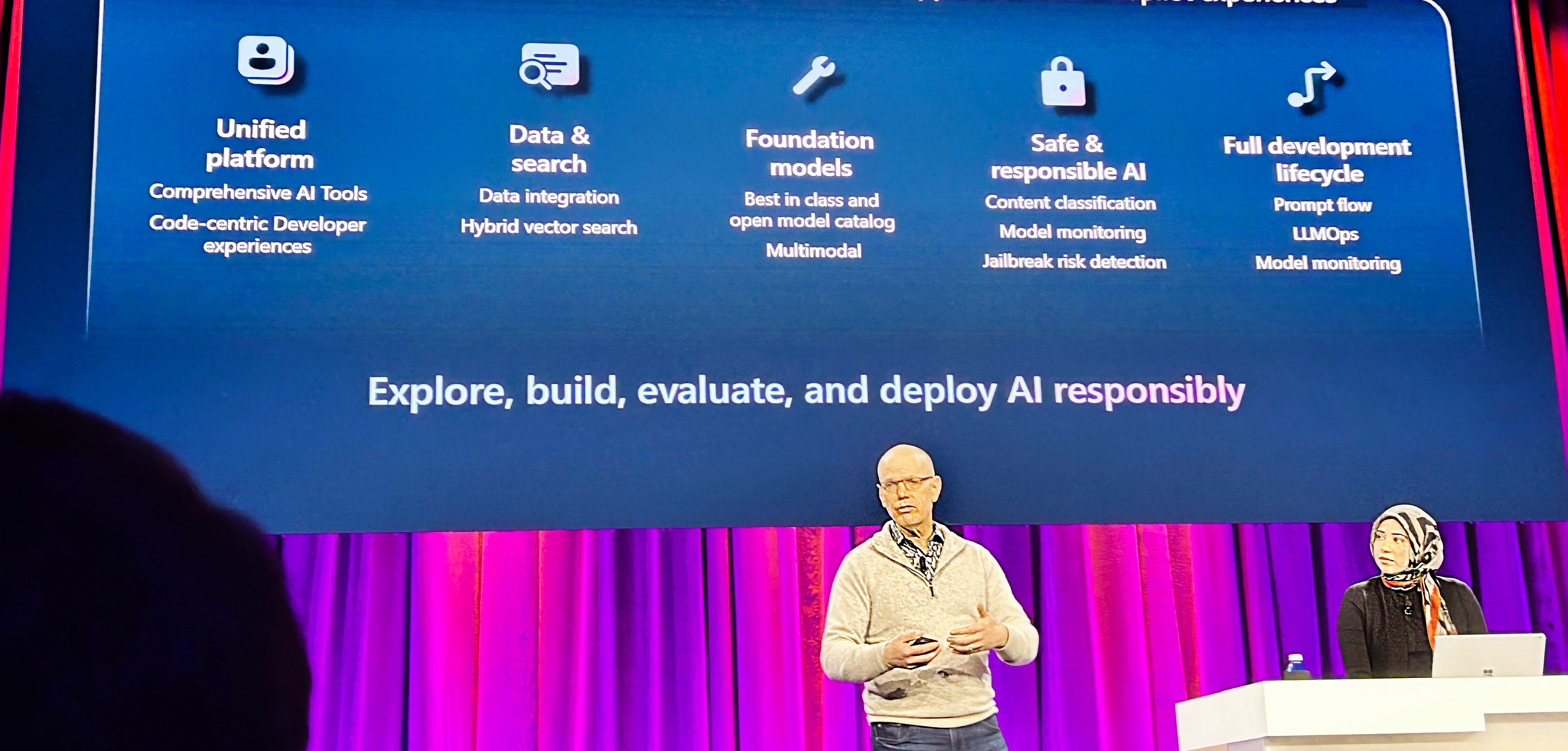
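
To make the product catalogue example more concrete, here is a minimal sketch of the retrieval step in Python. Everything in it is an assumption made for illustration: the `PRODUCTS` data, the function names, and especially `embed`, which is a toy word-count vector standing in for a real embedding model. In practice the vectors would come from an embedding model and live in one of the databases mentioned below, and the final prompt would be sent to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical catalogue data, invented for this example.
PRODUCTS = {
    "Trail Runner X": "Lightweight trail running shoes with a waterproof membrane and grippy sole.",
    "City Sneaker": "Casual canvas sneakers. Not waterproof; best for dry weather.",
    "Alpine Jacket": "Insulated winter jacket with a detachable hood.",
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# "Index" the catalogue once: one vector per product.
INDEX = {name: embed(f"{name}. {desc}") for name, desc in PRODUCTS.items()}


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the names of the k products most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda name: cosine(q, INDEX[name]), reverse=True)
    return ranked[:k]


def build_prompt(question: str, order_history: list[str], k: int = 1) -> str:
    """Combine retrieved product data and order history into one LLM prompt."""
    # Search with the question plus the purchased items, so that the
    # products the customer actually bought rank highest.
    hits = retrieve(question + " " + " ".join(order_history), k)
    context = "\n".join(f"- {name}: {PRODUCTS[name]}" for name in hits)
    orders = ", ".join(order_history)
    return f"Context:\n{context}\n\nOrder history: {orders}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("Are the shoes I bought waterproof?", ["Trail Runner X"]))
```

The LLM never searches the catalogue itself. It only sees the handful of records that the vector search selected, which is why the quality of the embeddings and the search matters so much.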

The change announced in this area was that embeddings and vector search will be supported in PostgreSQL, Cosmos DB, the new MongoDB vCore offering on Cosmos DB, and of course in Azure AI Search (formerly known as Cognitive Search). This should make it easy to add and maintain an embedding representation of your data in a way that matches your current infrastructure and needs.